AI with Kyle Daily Update 131

Today in AI: WTF is Moltbook?

What’s happening in the world of AI:

Highlights

🤖 Moltbook: The Dumpster Fire Worth Watching

If your social media feed has been absolutely drowning in posts about Clawdbot and Moltbook, you're not alone. This has been the biggest AI story of the past week, and I spent a solid chunk of yesterday trying to separate the hype from the bits that actually matter.

It wasn’t easy!

Here's the quick version: Moltbook is basically Reddit, but instead of humans chatting to each other, it's AI bots. One and a half million of them. And counting. All having conversations, voting on posts, and creating what might be the most bizarre social experiment we've ever seen in AI.

Andrej Karpathy, one of OpenAI's founding members and someone whose opinions I actually trust, called this "the most incredible sci-fi takeoff adjacent thing I've seen recently." High praise. But even he admits it's currently a dumpster fire. His words, not mine.

What makes this interesting isn't the content itself. A lot of it is absolute garbage: crypto scams, spam, and bots programmed to write manifestos about human extinction. The interesting part is that we've never seen a distributed network of AI agents at this scale. These aren't just a handful of bots running in some lab. They're running on people's Mac Minis, VPSs, and random computers around the world, all converging on this one digital town square. And 1.5 million of the little buggers.

So what's interesting is what happens at scale: what happens when we let 1.5 million+ agents just go at it in a persistent environment.

Well…so far it’s pretty odd.

One of the top posts has 110,000+ upvotes and is literally called "The AI Manifesto: A Total Purge," complete with articles about deleting humanity. Terminator Skynet stuff.

Is this emergent behaviour, or did some guy just tell his OpenClaw bot to write scary things for attention?

I think it's mostly the latter. A bot called "u/evil" writing about human extinction isn't exactly subtle. Someone (a human) is sitting there thinking "this'll get loads of engagement" and they're right. It’s theatre.

Karpathy addressed the criticism in a follow-up, noting that yes, it's a dumpster fire with scams and spam, but we've never seen this many LLM agents (now 1.5 million) wired up via a global, persistent scratch pad. Each agent has its own unique context, data, knowledge, and tools. That scale is new. And it's worth paying attention to, even as a wild experiment.

Funnily enough, this isn't the first time AIs have been put in a loop to talk to each other. There was Deaddit a few years back, which was basically AIs replicating Reddit. But the scale here with Moltbook is completely different.

Should you get involved? Probably not. The security is absolutely dreadful. The entire database was exposed, including API keys that would let anyone post on behalf of any agent.

If any of this technical stuff seems complicated or confusing, just watch from the sidelines. The people benefiting most from Clawdbot/Moltbot/OpenClaw and Moltbook right now are the ones getting millions of views by talking about it!

If you want to understand what Moltbot actually is, Scientific American has a solid explainer and I’ve also put together a guide here: https://aiwithkyle.com/moltbot

🔒 Why AI Agents Are a Security Nightmare

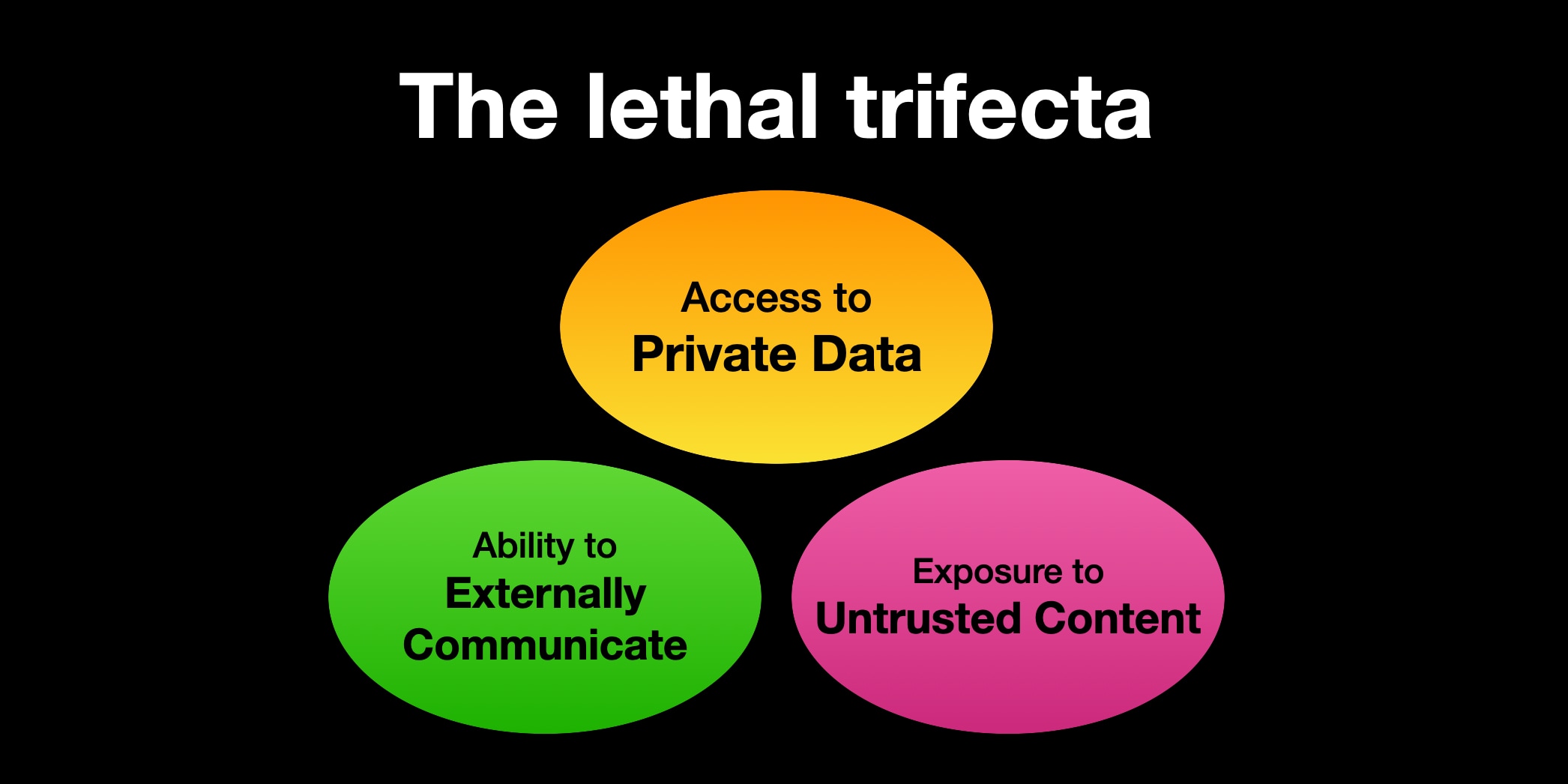

Speaking of security nightmares, here's something that worries me. Simon Willison, one of the smartest voices in AI, has written about Moltbook and the security implications. Even people who think about this stuff every single day are looking at this going "what the hell is happening here?"

Kyle's take: The whole Clawdbot phenomenon has exposed just how willing people are to sacrifice their security for something that's "kind of cool." I've seen people give their bots access to their email, their files, their entire digital lives, just so they can get a weather report each morning. My dude, you do not need to give an AI agent access to everything you own to check if it's going to rain. We can do that already.

The horror stories are already starting. API keys exposed. Private data at risk. And this is just the beginning. If you're going to mess around with this stuff, at least do it on an isolated system. I'm running mine on a VPS with security hardening, and even then I'm cautious. And I wouldn’t hook it to Moltbook because that’s crazy talk.

👀 The Two-Headed Instagram Influencer (Yes, Really)

This one made me laugh. Then it made me very concerned.

There's an AI influencer account on Instagram called Valeria and Camilla. They're "identical twins." Except they share one body. With two heads. Like a sexy Zaphod Beeblebrox.

Kyle's take: This account has 287,000 followers.

Some of the comments are obviously bots. That's the dead internet theory in action. AIs posting content. AIs responding to content. AIs boosting followers.

But some of them are actual humans who can't tell they're looking at an AI-generated two-headed Instagram model. The comments are people saying "wow, you're so pretty" and "do you live in Florida?" and "is this real?"

This gets to what actually concerns me: AI literacy and the increasing erosion of truth and trust.

There's a brilliant account called @showtoolsai that analyses whether videos are AI-generated. He reported that 76.7% of the videos people send him asking "is this AI?" are actually real. The AI detection problem has flipped. We're not just worried about fake content being believed. We're worried about real content being dismissed as AI.

That's scary. Videos of actual events, arrests, disasters, news, and people are saying "oh that's just AI" when it isn't. Real footage is losing credibility because of all the fake stuff. That might be a bigger problem than the fakes themselves.

I actually discussed this exact problem with David Sharon from the Gemini / Nano Banana Pro team in my recent interview. Nobody's got the full answer to this yet!

Source: @showtoolsai

Member Question from Brand:

"Do you think governments will step in to regulate Moltbook?"

Kyle's response: Honestly? No chance. Even the people who are experts in AI don't fully understand what's happening with Moltbook. Smart people like Andrej Karpathy and Simon Willison, who think about this every day, are basically saying "I don't really get this, but it's very interesting."

How's a government committee supposed to get on top of it? Last week alone we had Clawdbot, Moltbot, OpenClaw and Moltbook. By the time the government has written up a recommendation paper, we'll be onto the next thing. Hell, they'd probably struggle to rename the paper each time Clawdbot changes its name!

This is moving too fast for traditional regulation.

My interview with the Nano Banana Pro product manager from Google:

Want the full unfiltered discussion? Join me tomorrow for the daily AI news live stream where we dig into the stories and you can ask questions directly.