AI with Kyle Daily Update 018

Tea App Leaks 72,000 Photos, GPT-5 'Prime' Leaked, Google Adds AI Search in UK

The skinny on what's happening in AI - straight from the previous live session:

Highlights

🔒 Tea App Security Breach: Not Vibe Coding's Fault?

The Tea app (a women's dating safety platform) suffered a major data breach exposing 72,000 user photos and personal details. Bad, bad, bad on so many levels. Many blamed vibe coding, but the vulnerable code was written before vibe coding even existed.

Kyle's take: This is getting unfairly painted with the vibe coding brush. The Tea app's insecure storage bucket was created in 2022-2023, well before vibe coding was viable, or even existed as a term (it was coined in February 2025).

Yes, vibe coding can 100% create security risks if you don't know what you're doing, but this is just plain old bad coding.

The related concern? The UK's new Online Safety Act is forcing companies to collect ID verification data without providing any centralised security for it. Expect more breaches like this…

🔍 Google Adds AI Mode to UK Search

Here we go boys and girls.

Google quietly rolled out a dedicated "AI" tab in UK search results, sitting prominently at the front of the search options alongside "All," "Images," and "Shopping." This gives direct access to Gemini without needing a separate subscription. They are baking AI into Google Search front and centre.

Kyle's take: Google's in an impossible position. Their cash cow (search advertising) is being threatened by the very AI technology they're now embracing. They're trying to have their cake and eat it, but I'm not convinced they have an overarching strategy. Different Google divisions are literally working against each other: in the last week alone, YouTube has demonetised AI content whilst simultaneously launching Veo 3 AI video tools natively in YouTube…

Source: UK rollout

Member Question from Hit and Don't Get Hit: "What actually is vibe coding?"

Kyle's response: Vibe coding means using natural language to build software instead of learning programming languages. Tools like Lovable let you literally say "build me a Pomodoro timer with 8-bit graphics" and it creates working code.

It's brilliant for rapid prototyping, but the security risks are real. In short, hackers know more about breaking into apps than vibe coders know about securing them! If you are building for a business, start simple, then get a professional security audit before going live.

But don’t let these concerns get in the way of playing around with the tools, especially for personal projects. Honestly, it’s close to magic.

This question was discussed at [11:33] during today's live session.

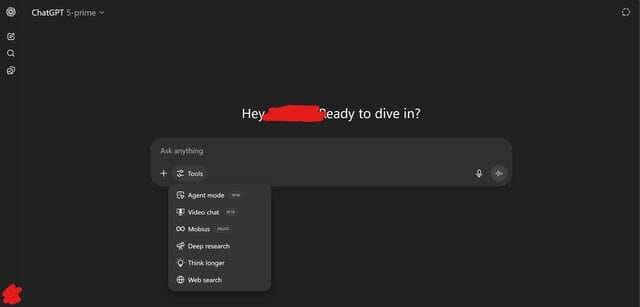

Bonus speculation: Screenshots from Australia show ChatGPT-5 Prime with new "Video Chat" and "Mobius" features. Could be fake, could be the August rollout beginning. If real, video chat likely means ChatGPT is getting an avatar face - think Character.ai but for the mainstream market.

Real or fake? We’re closing in on launch, so it could be legit.

Want to submit a question? Drop it below this video and I'll cover it in a future live.

Want the full unfiltered discussion? Join me tomorrow for the daily AI news live stream where we dig into the stories and you can ask questions directly.